Problem

- Suppose your product is going through a major overhaul and re-architecture in which existing microservices are split into several services or merged into one. The routes at the ingress level quickly become a jumble.

- This makes the transition harder, because canary-based routing gradually becomes complicated. To simplify the canary setup, we take routing into our own hands.

- If you can use Consul and it works well for you, that may be the better option: Consul is more mature and production ready, so you are less likely to break things.

The Solution

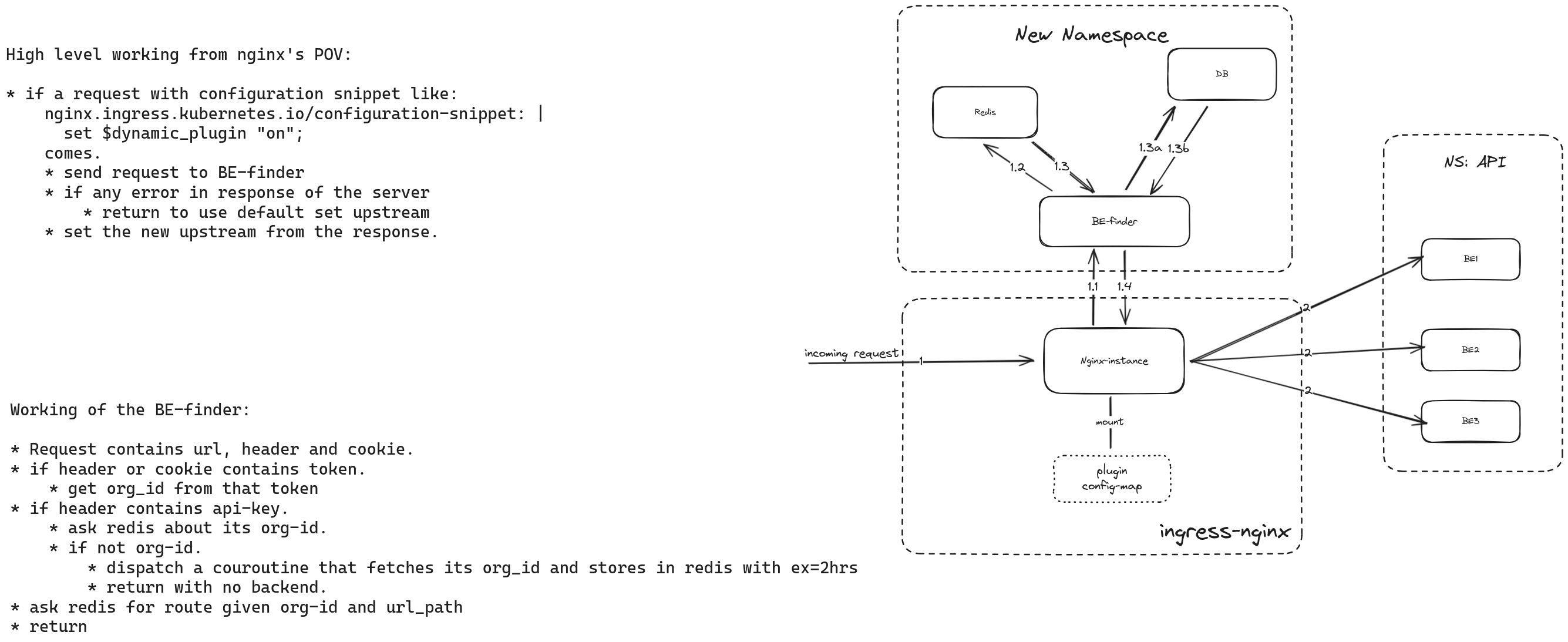

- Architecture proposed:

Implementation

- It is almost the same as the auth implementation, but it requires a bit of scripting, because we are not just checking a status code to return 401 or allow the traffic.

- This can be achieved with the configuration snippet annotation in ingress-nginx.

- We write a configuration snippet that sends a request to the server responsible for deriving the route information from the request body. It looks more or less like the one below:

| |

- This matches the path `/api/v1/docs1/docs/custom/` and sends a request to the server to get the backend information, which in turn is used to populate `proxy_upstream_name`.

- Don't forget to set `ngx.ctx.balancer` to nil at the end. Read here
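
The server side of that lookup could be sketched in Go roughly as follows. This is only an illustration under assumptions, not the original implementation: the JSON field `tenant`, the routing table, the `X-Backend` response header and the upstream names are all hypothetical. The names merely mimic the `<namespace>-<service>-<port>` form that ingress-nginx uses for `proxy_upstream_name`.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"strings"
)

// routeRequest is a hypothetical request body; the "tenant" field is an
// assumption made for this sketch.
type routeRequest struct {
	Tenant string `json:"tenant"`
}

// routes and defaultUpstream are made-up example data.
var routes = map[string]string{
	"acme": "default-docs-v2-80",
}

const defaultUpstream = "default-docs-v1-80"

// resolveUpstream picks an upstream name from the request body.
// Unknown tenants and unparsable bodies fall back to the default upstream.
func resolveUpstream(body []byte) string {
	var req routeRequest
	if err := json.Unmarshal(body, &req); err != nil {
		return defaultUpstream
	}
	if upstream, ok := routes[req.Tenant]; ok {
		return upstream
	}
	return defaultUpstream
}

// lookupHandler returns the chosen upstream in the (hypothetical)
// X-Backend response header, where the snippet could read it.
func lookupHandler(w http.ResponseWriter, r *http.Request) {
	body, err := io.ReadAll(io.LimitReader(r.Body, 1<<20))
	if err != nil {
		http.Error(w, "cannot read body", http.StatusBadRequest)
		return
	}
	w.Header().Set("X-Backend", resolveUpstream(body))
	w.WriteHeader(http.StatusOK)
}

func main() {
	// Exercise the handler in-process instead of binding a port.
	req := httptest.NewRequest(http.MethodPost, "/lookup",
		strings.NewReader(`{"tenant":"acme"}`))
	rec := httptest.NewRecorder()
	lookupHandler(rec, req)
	fmt.Println(rec.Header().Get("X-Backend"))
}
```

Returning the decision in a header keeps the response body empty, so the Lua side only has to copy one value into `proxy_upstream_name`.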

Enhancement

- This looks good for a POC, but for a real-world application we want to make it as frictionless as possible.

Creating an ingress-nginx plugin

- We create a plugin that does all of this, plus a flag that determines whether to run the plugin for each ingress.

| |

- As we can see, there is a flag at the top, `dynamic_plugin`, that is set in each ingress through a configuration snippet to trigger the execution of this script on each request.

- The plugin code is written in a ConfigMap and mounted into the ingress controller Kubernetes pod as a Lua file in the plugins directory.

Mounting the plugin

- We add the volume mount to our controller deployment by adding the following lines in the right place:

| |

- More about creating an NGINX Lua plugin here.

Running the plugin

- Create a new ingress, omitting the Lua part and adding a configuration snippet annotation that sets the flag defined in the plugin's Lua code.

- The annotations will look like:

| |

- Now each request sent to an ingress with this variable set will have its route resolved dynamically according to your requirements.

The BE-finder server

- This is the backbone of your ingress, so it should be as fast as possible. It should therefore be written in an efficient language such as Rust, Go or C/C++. I used Go.

- The application uses Redis or an in-memory cache to speed things up if your data does not change frequently. If this does not suit your needs, you can set up a cron-like system that periodically refreshes the keys in the cache.

- A cache miss triggers a goroutine that fetches the information from the database for the next request to pick up.
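
The miss-then-refresh behaviour described above might look like the following in-memory sketch. It assumes that a miss is answered with a default backend while the lookup runs in the background. The type `RouteCache`, its methods, the `fakeDB` loader and the backend names are all hypothetical, and a Redis-backed version would differ.

```go
package main

import (
	"fmt"
	"sync"
)

// RouteCache is a hypothetical in-memory cache of path -> backend name.
type RouteCache struct {
	mu       sync.RWMutex
	routes   map[string]string
	inflight map[string]bool // paths with a refresh already running
	wg       sync.WaitGroup
	fallback string
	load     func(path string) (string, error) // stands in for the database query
}

func NewRouteCache(fallback string, load func(string) (string, error)) *RouteCache {
	return &RouteCache{
		routes:   make(map[string]string),
		inflight: make(map[string]bool),
		fallback: fallback,
		load:     load,
	}
}

// Get never waits for the database. On a miss it returns the fallback
// and starts a goroutine that fills the cache for later requests.
func (c *RouteCache) Get(path string) string {
	c.mu.RLock()
	backend, ok := c.routes[path]
	c.mu.RUnlock()
	if ok {
		return backend
	}
	c.refreshAsync(path)
	return c.fallback
}

// refreshAsync starts at most one loader goroutine per missing path.
func (c *RouteCache) refreshAsync(path string) {
	c.mu.Lock()
	if c.inflight[path] {
		c.mu.Unlock()
		return
	}
	c.inflight[path] = true
	c.wg.Add(1)
	c.mu.Unlock()

	go func() {
		defer c.wg.Done()
		backend, err := c.load(path) // slow call happens outside the lock
		c.mu.Lock()
		defer c.mu.Unlock()
		delete(c.inflight, path)
		if err == nil {
			c.routes[path] = backend
		}
	}()
}

// Wait blocks until pending refreshes finish; handy in tests and on shutdown.
func (c *RouteCache) Wait() { c.wg.Wait() }

func main() {
	// fakeDB is a stand-in for the real database lookup.
	fakeDB := func(path string) (string, error) {
		return "default-docs-v2-80", nil
	}
	cache := NewRouteCache("default-docs-v1-80", fakeDB)
	fmt.Println(cache.Get("/api/v1/docs")) // miss: fallback is returned
	cache.Wait()
	fmt.Println(cache.Get("/api/v1/docs")) // hit: value loaded in the background
}
```

The `inflight` map keeps a burst of requests for the same uncached path from starting one database query each. A cron-like refresh could reuse the same loader from a `time.Ticker` loop.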

Conclusion

- We do not need a complicated setup with service meshes to get intelligent routing.

- Performance is likely to be better than with a service-mesh-based solution.

- Easy to set up and use.